Anachro-mputing blog

retro and not-so-retro computing topics

Updates

|

2026 Jan 12 MIDI to VGM translator, beta 5

Update to this program:

added -r switch for reserved channels

can specify output filename

fixed SN76 drums using chan 3 freq (which was never set to anything in particular)

fixed header endianness on X68

looks for ISC subdirectory in the directory where the executable resides (except in Linux)

songs are now allowed to key-on a note when volume is zero (apparently this is a thing)

XM2MID crash fix, support for EEx effect, and 'songloops' option

OPNZ fixes

added OPLL2MID to the archive (converts YM2413 .VGM to MIDI)

MID2VGM-beta5 download

|

|

2026 Jan 10 even more NOWUT bugs

The new 'appgetpath' routine in the latest PIODOS.NO contained some long conditional jumps

which should have been short jumps. It still works fine on 386 and above, but crashes on 286 and

below, because those CPUs only have short (8-bit displacement) conditional jumps. This is another

item which will need to be fixed in the next release.
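For illustration, here is a rough Python sketch of the encodings involved for a 16-bit 'jump if zero'. This is not NOWUT's code generator; the function name `encode_jz` and its `allow_386` parameter are made up for this example, and `disp` is assumed to be measured from the end of the emitted bytes.

```python
import struct

def encode_jz(disp, allow_386=False):
    """Return machine code for a 16-bit-mode JZ (hypothetical helper)."""
    if -128 <= disp <= 127:
        # Short form, available on every x86: 74 rel8
        return bytes([0x74]) + struct.pack("<b", disp)
    if allow_386:
        # Long conditional jump, 386 and above only: 0F 84 rel16
        return bytes([0x0F, 0x84]) + struct.pack("<h", disp)
    # 8086/286 substitute: JNZ short over a 3-byte near JMP (E9 rel16)
    return bytes([0x75, 0x03, 0xE9]) + struct.pack("<h", disp)

print(encode_jz(0x200, allow_386=True).hex(" "))
print(encode_jz(0x200).hex(" "))
```

The last form costs one more byte, but it runs on anything from the 8088 up.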

|

|

2026 Jan 9 yet more bugs

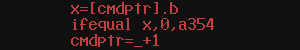

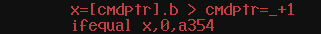

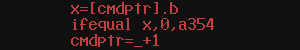

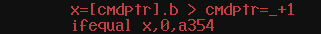

A few programs I posted in source form (eg. vgz2vgm, vgmtos98, s98tovgm) have a bugged variant

of the routine 'readcmdstr' which can return garbage data if it is called more times than there

are command line arguments.

This part:

should have been this instead:

|

|

2025 Dec 24 NOWUT bugs

I discovered a rarely occurring bug when compiling for SuperH. A datadump that exists during the

first pass can be eliminated during the second pass, when long jumps get optimized to short

jumps. As a side effect, the counter for datadumps does not advance and the list of datadumps

from the first pass is no longer tracked correctly. A subsequent datadump may then overflow. This will

need to be fixed in the next release.

|

|

2025 Dec 12 ADPCM encoder bugs

Turns out the program I posted on May 30th is buggy, mainly in calculating the sample

length, but also in the encoding algorithm. I attempted to correct it with this:

; oldsampl=y*z.sd shr 2.sb+_

oldsampl=y shl 1+1*z sar 3+_

Oddly enough, it doesn't sound much different. I'm not convinced this is correct either.
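Read strictly left to right, the corrected line appears to compute ((2*y+1)*z) >> 3. Assuming y is the 3-bit magnitude of the ADPCM nibble and z is the current step size (an interpretation, not something stated above), that is one common way of writing the OKI-style reconstruction delta. The Python sketch below compares it with the bit-by-bit form often seen in reference decoders. Both function names are hypothetical.

```python
def delta_product(mag, step):
    """Delta as a single multiply: ((2*mag + 1) * step) >> 3."""
    return (((mag << 1) + 1) * step) >> 3

def delta_bitwise(mag, step):
    """Delta built from shifted copies of the step, one per magnitude bit."""
    d = step >> 3
    if mag & 1:
        d += step >> 2
    if mag & 2:
        d += step >> 1
    if mag & 4:
        d += step
    return d

# The two forms only differ in where truncation happens.
for mag, step in [(7, 16), (1, 19)]:
    print(mag, step, delta_product(mag, step), delta_bitwise(mag, step))
```

The results can differ by a small amount because the bitwise form truncates each term separately, which might be part of why a change like this is hard to hear.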

|

|

2025 Nov 26 NOWUT version 0.35 release

This release has several minor fixes and improvements. 8086 code is now faster due to a change in

how shifts are done. NOWUT86 compiling itself is nearly 10% faster. The main addition is a set of new

routines in the PIOxxx modules. 'memrequest' and 'memreturn' allow programs to allocate and free memory

blocks at runtime. 'appgetpath' allows a program to locate files in the same directory as its executable,

regardless of the current directory. These routines may become useful in future releases of eg. the

disassemblers.

(In PIOGEM and PIOLNX, appgetpath only returns a null string. I can't find a reliable way to

obtain this information in EmuTOS, and the *nix people are ideologically opposed to it.)

Check the documentation.

Download the complete archive.

And be sure to obtain Go Link from Go Tools website.

|

|

2025 Nov 16 updated text/hex editor: RaeN 0.92

changelog:

added tab expansion option for files formatted with tabs

added SHIFT+F3/F4 'find selection' option

fixed a problem with files being saved in the wrong directory

CTRL+C can copy text to the Windows clipboard

download RaeN 0.92 here (win32 only)

(see the Oct 2022 posting for the Shift-JIS font and additional info)

|

|

2025 Nov 9 Megasquirt post-mortem (Part Two)

Megasquirt, at least in its original configuration, has two outputs to control fuel injectors.

When there are more injectors than this, they'll be separated into two batches, with the injectors

in each batch being connected in parallel. (In my case there are a total of six injectors.)

The software can be configured to turn on both injector batches at the same time, or alternate from

one to the other. On a four-stroke engine, each injector has to operate at least once per two engine

rotations, though operating more than once is also possible. There are pros and cons to each option,

for instance:

using one simultaneous squirt results in the most latency between when the pulsewidth is determined

and when the final cylinder actually draws in the air/fuel mixture. It can also cause fluctuations

in fuel rail pressure.

using double or triple squirts means that one or more cylinders will get a fraction of the proper

fuel dose when transitioning into or out of 'overrun' (fuel cut)

Since a single 1MHz timer is used to control both batches, a bit of juggling needs to happen in

'alternating' mode when the pulsewidths overlap. The original code had a minor bug where a 16-bit

subtraction didn't account for the borrow, which could throw off the timing by 256 microseconds. In

the situation where pulsewidths are long enough to overlap, 256uS is unlikely to be a significant

error. Nevertheless, I have fixed the bug, which took more code than one might expect because the

timer registers require reading the high byte first for some reason.
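The effect of a dropped borrow can be shown with a small Python sketch. It only models the arithmetic of a 16-bit subtraction done one byte at a time, as an 8-bit CPU has to do it. It is not the firmware code (which is 68HC908 assembly), and the function name and parameter are invented for the example.

```python
def sub16_bytewise(a, b, propagate_borrow=True):
    """Subtract two 16-bit values as separate low-byte and high-byte steps."""
    lo = (a & 0xFF) - (b & 0xFF)
    borrow = 1 if lo < 0 else 0
    hi = (a >> 8) - (b >> 8)
    if propagate_borrow:
        hi -= borrow          # what a subtract-with-carry does for the high byte
    return ((hi & 0xFF) << 8) | (lo & 0xFF)

a, b = 0x1234, 0x00FF
good = sub16_bytewise(a, b)
bad = sub16_bytewise(a, b, propagate_borrow=False)
print(hex(good), hex(bad), bad - good)
```

Whenever the low bytes borrow, the buggy result is high by exactly 256 counts, which is 256 microseconds with a 1MHz timer.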

Considering all of that, I was leaning toward using '2 squirts alternating' but my new acceleration

algorithm doesn't operate optimally in alternating mode. I would have needed to find some

more free memory locations to keep track of two offsets at the same time. Instead, I continued to run

with '2 squirts simultaneous'.

Latest source and Megatune files for

TJ's mod, version M30-3e

|

|

2025 Oct 26 Megasquirt post-mortem (Part One)

A decade ago, I posted my slightly modified version (known as M30-3d) of MS1 Extra firmware, which is the

68HC908 assembly code powering the (original, 8-bit) Megasquirt fuel injection controller. I used it thereafter

to run a BMW M30B32. Last year the MS went dead suddenly and I had to pull it out to diagnose and repair. I

decided to revisit the firmware code while I was at it.

The main modification that I had made was a new acceleration algorithm (which I called NMA) to replace the

official one. The reason I did this was because the official one relies on a throttle position sensor. My

engine didn't have a TPS, and I didn't want to add one.

What is an 'acceleration' algorithm? It's all about how the fuel injection responds to changes in engine

load. As long as the engine speed, load, and temperature remain the same, the amount of fuel injected

(as determined by the opening duration of electronic fuel injectors) can also remain the same. (There are

some additional variables which play a lesser role, like fuel quality and atmospheric conditions.)

When engine load is changing, there are yet more confounding factors. How much latency is there between

measuring the change in load and the next injection cycle? How much fuel remains in the intake port in

between intake cycles? How much fuel that was condensed in the intake port at a higher manifold pressure

will vaporize when the manifold pressure goes down, or vice versa? My NMA code was intended to temporarily

inject additional fuel whenever manifold pressure (MAP) increased, or inject less when MAP decreased. This

is similar to what the official TPS accel does. But it turns out there were problems with my implementation.

problem 1: Part of the code was inside an interrupt handler, whereas most of it was in the

'calcrunningparameters' routine which loops at an arbitrary speed, running asynchronously with engine speed.

A shared variable changed by the interrupt code at the wrong time could cause unintended behavior of the main

code. *facepalm*

problem 2: Once the code measured a change in MAP, it calculated an offset to be applied to injection

duration. Unfortunately, this value was then 'locked in' until the injection happened, and further changes

in MAP during this time would not be immediately accounted for.

problem 3: This may or may not be a real problem, but I started to think my ADC sampling code should

be redesigned to be more fault tolerant. Most of the MS code consists of testing flags, branching, and

relatively simple calculations, and most incorrect results caused by hardware failure would have a good

chance to be corrected during the next iteration of the same code. Part of my code used a counter as an

address offset to write to a table. Possibly an unnecessary risk?

I believe I've corrected these problems, at least for the case when both injector banks are activated

simultaneously. The algorithm could be further improved when using alternating injector banks, but I'll

address that more in the next post.

|

|

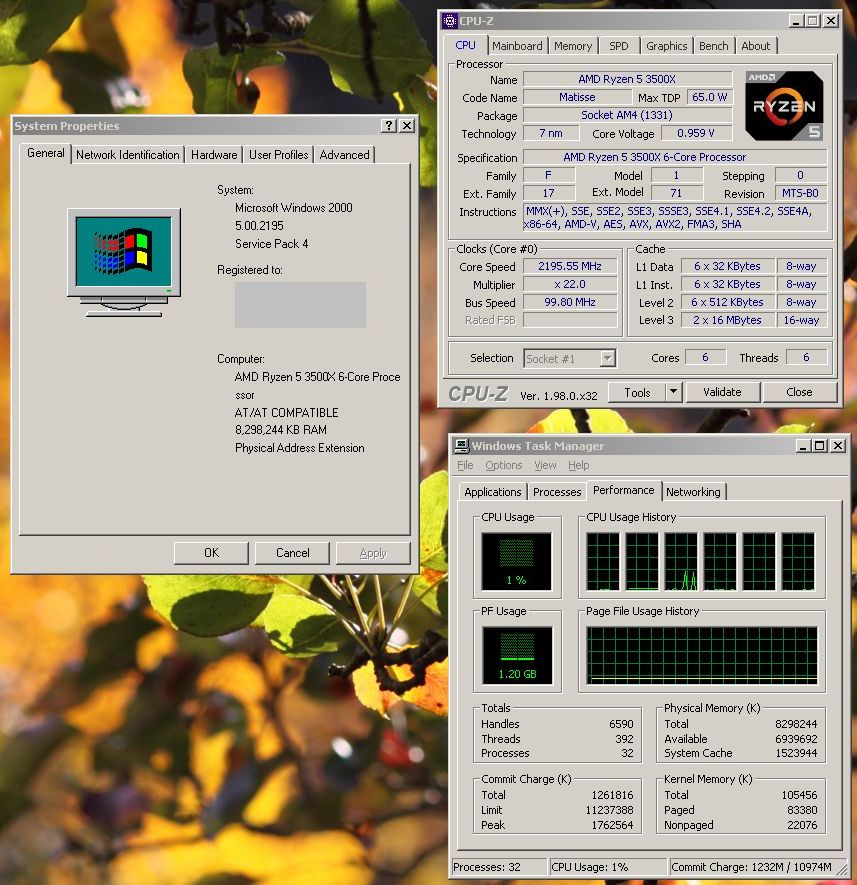

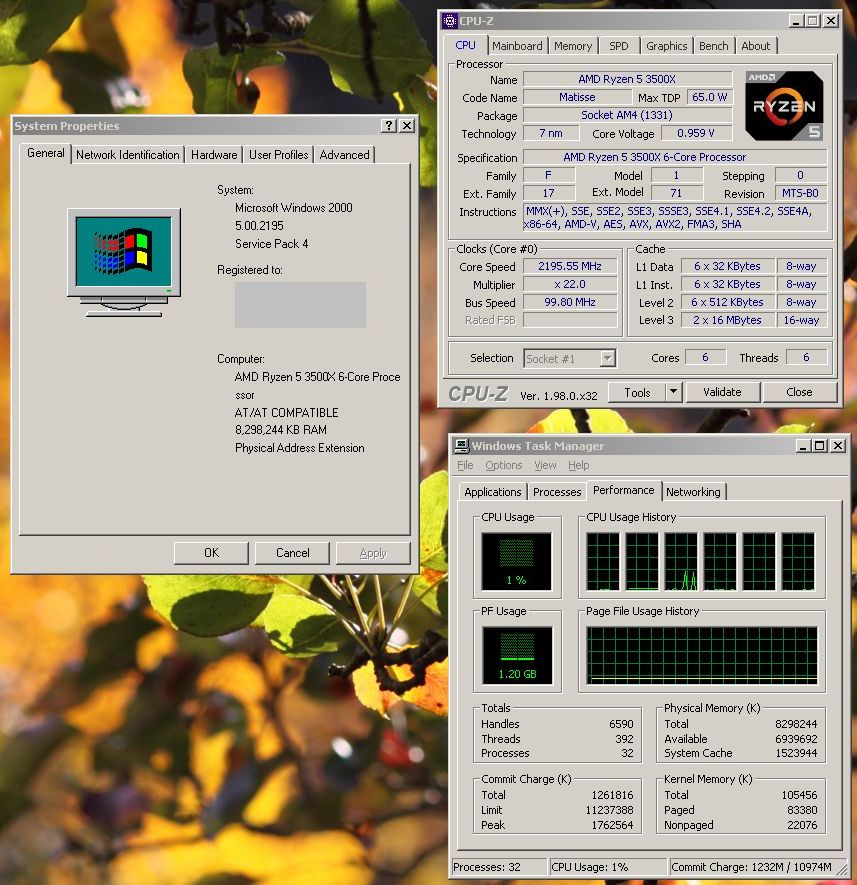

2025 Jul 26 upgrading the hardware and keeping the software

Reinstalling Windows has long been a popular "solution" to various problems (real or perceived), even

though it's an utterly terrible thing which should be avoided at all costs. I am happy to go a decade or

more without reinstalling if I can help it. Why? Because a new install requires one to fix all of the

crummy default settings, reinstall all of the drivers and codecs, and deal with various applications which will quit

working because their crap went missing from the registry. (And that's assuming that you didn't 'reformat'

and thereby delete all the applications, another popular but strange practice.)

But sometimes when you try to boot an existing Windows install on a new motherboard, it's FUBAR. You

can monkey around all day with windbg and regedit and maybe after that it's still FUBAR and you have to

admit defeat. What then?

Running Windows 2000 on my new motherboard requires new ACPI and AHCI drivers from Windows XP Integral

Edition, plus a hacked HAL, and NTOSKRNL extender. I set it up in a separate case to experiment with it until

I could get a working install, so I could continue to use my main machine with the old motherboard.

(Aside: AHCI has been a giant boondoggle, resulting in uncountable wasted man-hours worldwide due to its

incompatibility with PCI IDE. It had a negligible performance impact and is now rendered moot by SSDs.)

Could I get my old Windows 2000 install to boot on the new motherboard by inserting the new drivers?

Unlikely. In fact, I tried to make it boot by inserting the driver for a JRAID PCIe SATA/IDE card and

failed at that. So I didn't attempt doing it with AHCI.

Instead, when the time came I just renamed WINNT to WINNTold, and copied the WINNT directory from the

new install. Keeping the DOCUME~1 directory around maintains the NTUSER.DAT registry hive and some

application data, which solves many of the issues with a new install. Drivers still need to be reloaded,

but keeping around the old WINNT directory can be useful for the sake of digging out OEMx.INF files and

whatnot. I also exported the old SOFTWARE hive to a text file so I could extract various parts with

NOTEPAD and import them again into the new registry. This was crucial for keeping the font settings I

had set up for running Japanese games.

Some applications still had problems, and it was difficult to know which registry entries, if any,

were the cause. For instance, Canon DPP now failed silently when I tried to launch it. Going through

a trace with Ollydbg, I found a failed call to CoCreateInstance. So yes, the problem was with the

registry. But rather than try to hunt down the right ones, I just installed a dummy copy of DPP by

running DPP100-E.EXE, and now stuff works again. (The installer for DPP 3.14.15 refuses to run on

Windows 2000, even though the application itself works.)

Windows 2000 is now ready to go. But I have a dual boot with Windows 7. The old PC had two HDDs, but

I've replaced one of them with an SSD and rearranged the partitions. Windows 7 was not booting anymore.

Unlike Windows 2000 which contains boot settings in a plain text file, BOOT.INI, Windows NT 6 and later

moved the settings to a binary blob called BCD. BCD is like a registry hive, and can be edited, but there

is little progress to be made by editing it because the configuration is also obfuscated by GUID nonsense.

The only way I know to fix this thing is by booting a Windows 7 DVD and requesting it to do a repair. So I

did that. Except the DVD I have is "Home Premium" whereas the OS installed was "Professional" and so it

declined to do the job. BUT! After I copied over a dummy install of "Home Premium" to the same HDD, then

the DVD went ahead and fixed the BCD so it could boot either "Home Premium" or "Professional". (Though

I only need one, and the "Home Premium" is already being used on another machine.)

Well, not quite. Windows 7 could begin to boot, but still didn't know to load AHCI drivers, since the

old motherboard was in IDE mode. Thankfully this isn't too hard to fix in Windows 7.

After booting from the DVD, you can press Shift+F10 to get a command prompt. Run regedit. Use 'load hive'

to access the Windows 7 SYSTEM hive. Find Services\MSAHCI, and set 'Start' to '0'.

|

|

2025 Jun 6 Snooper SSD benchmark

I happened to run Snooper with a SATA SSD connected and got a result far beyond any HDD or compactflash

card.

By contrast, I think the video benchmark topped out somewhere around this level back in the socket 7 era.

The CPU benchmark is a small loop of 16-bit code, so it isn't terribly relevant on modern CPUs (this one

being a Zen 2), but here are some historical results for comparison:

8088 at 4.77MHz = 2.1

8086 at 7.16MHz = 4.3

286 at 6MHz (IBM AT) = 6.7

386DX at 25MHz = 45

486 at 100MHz = 274

|

|

2025 Jun 1 partition realignment with a sector editor

I connected a new SSD and created a partition with Windows 2000. The entry in the MBR (beginning at

$1BE) initially looked like this:

00 01 01 00 0C FE FF FF 3F 00 00 00 DB F8 CE 1D

This is the traditional (non)alignment where things begin at sector 63. Bytes 1,2,3 indicate head 1

(the second head, since the first is 0), sector 1 (the first sector, since 0 is not a valid sector

number), and cylinder 0. Bytes 8~11 contain the starting sector number as a little-endian dword

($3F = 63), which agrees with the CHS address.

The newer custom for flash-based storage media is to align on a 1MB boundary. That means sector

2048, rather than 63. My modified partition entry is this:

00 20 21 00 0C FE FF FF 00 08 00 00 DB F8 CE 1D

The final dword is the size of the partition. You might expect that it will need to be decreased

when the beginning of the partition is moved ahead. But in this case, Windows had left a few MB of

unused space at the end of the 'disk' anyway, so I left the size as it was.
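As a cross-check, the two entries can be decoded with a few lines of Python. This is only a sketch: the function names are invented, and the 255-head, 63-sector geometry used for the CHS conversion is an assumption (it is the usual translated geometry, but it is not stored in the entry itself).

```python
import struct

def parse_entry(hex_text):
    """Decode one 16-byte MBR partition entry given as hex text."""
    raw = bytes.fromhex(hex_text)
    lba_start, num_sectors = struct.unpack_from("<II", raw, 8)
    return {
        "bootable": raw[0] == 0x80,
        "head": raw[1],
        "sector": raw[2] & 0x3F,                      # low 6 bits
        "cylinder": ((raw[2] & 0xC0) << 2) | raw[3],  # 10 bits in total
        "type": raw[4],
        "lba_start": lba_start,
        "num_sectors": num_sectors,
    }

def chs_to_lba(c, h, s, heads=255, sectors=63):
    """Convert CHS to a sector number (assumed 255/63 geometry)."""
    return (c * heads + h) * sectors + (s - 1)

old = parse_entry("00 01 01 00 0C FE FF FF 3F 00 00 00 DB F8 CE 1D")
new = parse_entry("00 20 21 00 0C FE FF FF 00 08 00 00 DB F8 CE 1D")
for e in (old, new):
    print(e["head"], e["sector"], e["cylinder"], e["lba_start"],
          chs_to_lba(e["cylinder"], e["head"], e["sector"]))
```

In both entries the CHS start and the dword start agree under that geometry, and 2048 sectors of 512 bytes is exactly 1MB.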

After that I ran FAT32FORMAT on it. Later, if I try to make it bootable, I might have to revisit

the MBR to flag the partition as bootable.

|

|

2025 May 30 ADPCM encoder (MSM6258)

Here is a Win32 program to convert an uncompressed, mono .WAV file to ADPCM, and another program to

play back the resulting sample on X68000. With NOWUT source.

download here

|

|

2025 May 3 new release of P-ST8 utility (with Zen support)

This Windows program for CPU performance control has been extended to support early Ryzen CPUs.

In theory, it now supports desktop CPUs ranging from Phenom to Zen 3, though only a small subset

of these processors have actually been tested. Note that voltage readings are still incorrect on

Ryzen.

download here

|

|

2025 Mar 24 vgmstrip

This is a quick and dirty program to filter a .VGM file so that it contains only YM2151, YM2203,

YM2608, YM2612, or SN76489 data. That means files that also contained PCM data will shrink, which can

be useful when playing them with OPNZ which runs from conventional memory and doesn't play PCM anyway.

vgmstrip - DOS EXE and source

|

|

2025 Mar 2 NOWUT utility to fix the checksum in a (32-bit) PE file

Generally the checksum is ignored, but in the case of Windows drivers and certain other system

files it does matter. Drivers with a wrong checksum will be rejected by Windows while booting.

This utility runs in DOS, which is handy because under DOS you can modify files that would

otherwise be locked while Windows is running.
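For reference, the checksum algorithm as it is commonly described looks like the Python sketch below: add up the file as 16-bit words with the carry folded back in, treat the checksum field itself as zero, then add the file length. This is not the PECHK source, and the function name is made up. The field offset (64 bytes into the optional header) is taken from the published PE format.

```python
import struct

def pe_checksum(data):
    """Compute the PE header checksum for a file image held in 'data'."""
    e_lfanew = struct.unpack_from("<I", data, 0x3C)[0]
    csum_off = e_lfanew + 4 + 20 + 64   # signature + COFF header + field offset
    buf = bytearray(data)
    buf[csum_off:csum_off + 4] = b"\0\0\0\0"   # existing checksum is ignored
    if len(buf) % 2:
        buf.append(0)                           # pad odd-sized files
    total = 0
    for (word,) in struct.iter_unpack("<H", buf):
        total += word
        total = (total & 0xFFFF) + (total >> 16)   # fold the carry back in
    return (total + len(data)) & 0xFFFFFFFF

# Tiny synthetic buffer, not a real executable: only e_lfanew is set.
fake = bytearray(256)
struct.pack_into("<I", fake, 0x3C, 0x80)
print(hex(pe_checksum(bytes(fake))))
```

The result would be written back as a little-endian dword at the same field offset.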

PECHK - DOS EXE and source

|

|

2025 Feb 28 6x disassemblers

This is a new release of NOWUT-based disassemblers. A disassembler for Z80/Z280, 1eighty, has been

added. The x86 disassembler, PEon, has been updated with fixes and new capabilities.

There's a nuisance that is particular to x86 and Z80 code, and that is misaligned disassembly. It

doesn't happen on architectures that use fixed-length instructions, and is possible but much less frequent

on 68000 where instructions are at least 16 bits and aligned on a word boundary.

When there is data mixed in with code, it's hard to know where the data ends and code begins on

x86 or Z80. Any single byte value could be the beginning of a valid instruction. There are multibyte

values which don't result in a valid instruction, but generally not enough of them to distinguish

between code and eg. an ASCII string. Strings mostly disassemble to LD instructions on the Z80. On x86,

a variety of things will come out, like ARPL, conditional branches, INC and DEC, prefixes, etc.

The worst thing is when the beginning of a routine gets mangled. For instance, a $00 byte before a

PUSH EBP instruction causes it to be disassembled instead as ADD [EBP-$75],DL.
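To see where that comes from, here is a deliberately tiny Python decoder for just this one case. It assumes the PUSH EBP ($55) is followed by the usual MOV EBP,ESP ($8B $EC), which is what the -$75 displacement implies. The function name is invented, and it only handles opcode $00 with a [reg+disp8] operand.

```python
REG8 = ["AL", "CL", "DL", "BL", "AH", "CH", "DH", "BH"]
REG32 = ["EAX", "ECX", "EDX", "EBX", "ESP", "EBP", "ESI", "EDI"]

def decode_add_rm8_r8(code):
    """Decode 'ADD r/m8,r8' (opcode $00), mod=01 form without SIB only."""
    if code[0] != 0x00:
        raise ValueError("not opcode $00")
    modrm = code[1]
    mod, reg, rm = modrm >> 6, (modrm >> 3) & 7, modrm & 7
    if mod != 1 or rm == 4:
        raise NotImplementedError("only [reg+disp8] is handled in this sketch")
    disp = code[2] - 256 if code[2] >= 0x80 else code[2]   # sign-extend disp8
    sign = "-" if disp < 0 else "+"
    text = f"ADD [{REG32[rm]}{sign}${abs(disp):02X}],{REG8[reg]}"
    return text, 3   # mnemonic and number of bytes consumed

# stray $00, then PUSH EBP / MOV EBP,ESP
print(decode_add_rm8_r8(bytes([0x00, 0x55, 0x8B, 0xEC])))
```

The $55 gets eaten as a ModRM byte (mod=01, reg=DL, rm=EBP) and the $8B as its displacement, so the real first two instructions of the routine vanish from the listing.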

PEon has several new checks designed to avoid this problem. The letter 'e' is very common in English

text, but the byte $65 (a GS segment override) is very uncommon in real code. Not only that, but a $65

before an INC/DEC register instruction or a conditional branch serves no purpose, so it becomes safe

enough to disqualify $65 $43 or $65 $74 as being legitimate code.
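A check along those lines could be sketched in Python as follows. This is not PEon's actual code. The function name is hypothetical, and the opcode ranges ($40-$4F for INC/DEC register, $70-$7F for short conditional branches) are those of 32-bit code.

```python
def implausible_gs_prefix(code, offset=0):
    """True if a $65 prefix at 'offset' precedes an opcode it cannot affect."""
    if offset + 1 >= len(code) or code[offset] != 0x65:
        return False
    nxt = code[offset + 1]
    is_inc_dec_reg = 0x40 <= nxt <= 0x4F
    is_short_jcc = 0x70 <= nxt <= 0x7F
    return is_inc_dec_reg or is_short_jcc

print(implausible_gs_prefix(b"retro", 1))       # 'e' followed by 't'
print(implausible_gs_prefix(b"\x65\xA1\x00"))   # GS: MOV EAX,[moffs]
```

A disassembler could use a hit like this as a hint to treat the surrounding bytes as data rather than code.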

Win32 builds, i386 Linux builds, and source:

6x disassemblers

|

|

2025 Feb 25 Cloudflare = fartcloud

Cloudflare is in a race with Google to see who can ruin the web more quickly. More websites are now

inaccessible behind a fartcloud wall, or unusable due to including unwanted fartcloud malware that does

nothing useful while BLATANTLY LYING about "checking the security" or some such.

Who came up with that idea? Was having their javascript garbage display a message that said "cleansing

your aura" or "renewing your spirit energy" thought to be unsatisfactory? Or was it commanded from above

that the magic word "security" must be included, because anything that happens on a computer is

guaranteed to be legitimate when it contains the magic word?

As long as they're committed to lying, why not say "checking the security of your neighborhood" instead?

Web users would be so pleased to know that their neighborhood was secure. Or you could make it more inclusive:

"checking the security of your community." Wow! I love javascript now! It's securing my community!

Please, fartcloud, inject some more of your javascript!

|

|

2025 Jan 28 a few more wrong x86 opcodes

The 80x87 instructions FSTCW, FNSTCW, and FSTP (80-bit version only) assemble and disassemble

incorrectly in the current versions of NOWUT and PEon.

PEon also fails to disassemble some MOVs to/from control and debug registers. It will do so only when

the 'mod' field is 0, while documentation suggests that the mod field is actually ignored for these

instructions and can be any value.
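A decoder that follows the documentation would simply not look at the mod bits. Here is a minimal Python sketch of that idea (not PEon's code; the function name is made up), covering opcodes $0F $20 through $0F $23:

```python
REG32 = ["EAX", "ECX", "EDX", "EBX", "ESP", "EBP", "ESI", "EDI"]

def decode_mov_special(code):
    """Decode MOV to/from CRn or DRn; returns None for anything else."""
    if len(code) < 3 or code[0] != 0x0F or code[1] not in (0x20, 0x21, 0x22, 0x23):
        return None
    modrm = code[2]
    reg, rm = (modrm >> 3) & 7, modrm & 7   # mod bits are deliberately ignored
    special = ("CR" if code[1] in (0x20, 0x22) else "DR") + str(reg)
    gpr = REG32[rm]
    if code[1] in (0x20, 0x21):
        return f"MOV {gpr},{special}"       # read from control/debug register
    return f"MOV {special},{gpr}"           # write to control/debug register

print(decode_mov_special(bytes([0x0F, 0x20, 0xC0])))   # mod = 3
print(decode_mov_special(bytes([0x0F, 0x20, 0x00])))   # mod = 0
```

Both byte sequences decode to the same instruction, since only the reg and r/m fields are used.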

|

Old updates

entries from 2023-2024

entries from 2021-2022

entries from 2020 and prior
